Many programmers share the view that “code is documentation enough” and do not invest time and effort into documenting their code. However, missing software documentation is often a sign of cognitive biases and poor management practices rather than of source code clarity. In turn, such an attitude increases the cognitive load on programmers during program comprehension and maintenance, and lowers software quality overall.

A number of tools for generating and cross-referencing documentation support programmers in this effort, though their adoption is low and their applicability is not universal. For now, we still depend on the first author of the code to document its what, how, and why, and to do so in the documentation even more than in the code itself.

In engineering and manufacturing, documentation accompanies a product throughout its life cycle. Development typically begins with design documentation. During production, a blueprint becomes a bridge, a circuit diagram becomes a fitness tracker, a sequence of pictograms becomes yet another IKEA shelf, and that weird sequence of abbreviations plus a ball of yarn becomes a sweater; the list goes on. After the product is released, the usage and maintenance documentation comes into play. The goal of all these manuals, care instructions, and diagnostics and repair leaflets is simple: to make clear what the product is, how to use it, and how to repair or adjust it.

You might expect the same for software products: after all, the process of creating them is called software engineering. Yet software engineers are often the very first to forgo documentation, and some even argue that since source code can be read and inspected directly, there is no need to explain it further. This applies both to internal documentation for other developers (e.g., source code comments and API documentation) and to external documentation (like help pages and user manuals).

In 1976 Niklaus Wirth, a world-famous Swiss computer scientist, published “Algorithms + Data Structures = Programs”, a book that has shaped the education of generations of programmers ever since. While the book was excellent for teaching algorithm design and data structure implementation, its primary goal was not to cover the whole software development life cycle (SDLC). Computer programs do not exist in a vacuum; they are written to process, generate, or otherwise manipulate data, and data has noise and gaps, or sometimes simply does not match the initial expectations. So while some assumptions about the data and the program’s expected behavior on it are made during the design phase, it is the actual behavior in the presence of real data that matters. That actual behavior is observed, documented, and adjusted, and the knowledge accumulated along the way is passed on to each subsequent person working on the program.

Even when the knowledge is passed on, there are discrepancies, as a 2016 study by P. W. McBurney and C. McMillan shows [1]. The authors examined the descriptive summaries of source code found in comments and discovered that the first authors of the code write more about what the code does and why, keeping the focus on the “big picture” (and the expected program behavior). All subsequent authors who had to work with the same code, by contrast, concentrated on the how, leaving notes about how the program components interact with one another (the actual behavior) without expanding the problem domain knowledge already present in the comments.

So why don’t programmers document their code consistently?

Several factors come into play here.

It does not seem necessary

One is the cognitive bias known as “the curse of knowledge”. I like the simple explanation of it on Wikipedia: “a cognitive bias that occurs when an individual, who is communicating with other individuals, assumes they have the background knowledge to understand”. In software development this translates directly into “I can understand my code [now], therefore it is clear enough for everyone else to understand”. This illusion of universal understanding does not take into account the hours spent studying the problem domain and building an abstract (and quite subjective) model of it. Nor does it include the additional hours spent on the actual engineering, during which the understanding of the data and control flow accumulates and allows the developer to simulate the execution of the source code in her head.

It seems to be there already

Another contributing factor is that, in most cases nowadays, source code resembles natural-language text. Back in the “good ol’ days” of the first computers and punched cards, executable code was a sequence of holes in thick paper, and just looking at it would not tell you much. Assembly languages, the next evolutionary step, were somewhat more human-readable, yet still required a dual flow of thought: the program’s algorithm (the why, typically written in comments) and the way it executes on a specific hardware architecture (the concrete instructions comprising the how). Decades later, high-level and general-purpose programming languages and their compilers matured. Their syntax became better suited to expressing abstract data structures and algorithms almost at the level of problem domain abstractions, and the work of translating these notations into executable machine code was delegated to specialized software, the compiler.

Nowadays high-level programming languages come in many flavors. You can write programs in a rather verbose imperative style that focuses on execution steps, opt for a functional language and express yourself as close as possible to the mathematical models underlying the solution, describe the execution as logical propositions, or even combine all of these, since polyglot systems are one of the recent trends in software development. Such high-level code can at times look very similar to natural language, but it is not. Think of it instead as a foreign language for which you have no dictionary (the documentation). You will work out its meaning eventually, yes, but how much time will you need?

And, as usual, there is no time

As with any engineering project, poor management, whether of oneself or of a team, has a very strong impact on the presence and quality of documentation. It has become a common but poor practice to value executable code more than its documentation: as soon as the code is operational enough, developers hurry on to other implementation tasks. Moreover, with the growing adoption of outsourcing and outstaffing, chances are that the first team working on the code will not be the one maintaining it later. Writing documentation takes time, and when the budget is tight, it is one of the first things to go.

The actual cost of not documenting the code

Documentation still gets produced, only indirectly, and all of us pay for it.

It costs oneself in the first place

Most textbooks and lectures on software development, when discussing the software development life cycle, repeat over and over that most of a program’s lifetime is spent not in the development phase but in the maintenance phase. A large part of maintenance goes into an activity called “program comprehension”: essentially, reading the code to understand what it does. A 2018 study by Xia et al. [2] finds that “on average developers spend ~58 percent of their time on program comprehension activities, and that they frequently use web browsers and document editors to perform program comprehension activities.” Let that sink in: nearly 3 minutes out of every 5 go to just understanding the source code.

It costs the developer community

Even when a development team releases a software product without complete documentation, people will still look for it, as the study above indicates. Stack Overflow [3], a “public platform building the definitive collection of coding questions & answers”, is one of the resources developers use most (yes, I rely on it, too). Who writes the answers and the examples? The community: in their free time, their work time, their study time. It is a more entertaining “second shift”, if you ask me, and also unpaid, though somewhat more recognized. Community-sourced documentation does have certain advantages for the growth of a software product, as the NumPy case study [4] shows, one contributing factor being the diversity of community members [5]. Yet concerns about documentation quality remain: how up to date and correct will such documentation be? Moreover, having the de facto documentation hosted on a separate resource is definitely not optimal for accessibility.

It costs the end users too

Poorly documented code is harder to understand, and therefore harder to test and to control for quality, especially under the usual tight time pressure (have I already mentioned poor management?). As a result, errors and bugs in software propagate into the real world, causing spacecraft losses (think of the Mars Climate Orbiter, lost because one piece of software produced thruster data in imperial units while another expected metric units) and medical equipment doing more harm than good and leading to patient deaths (the Therac-25 radiation therapy machine, whose software safety checks suffered from race conditions). There are many more examples: the numerous articles on the “N worst computer bugs in history” give an idea of how far it can go.

Solutions?

Nobody will argue that producing documentation is easy. We, scientists and practitioners, are still working on tool support to lessen this burden. So while there is no single solution (and likely never will be), several existing ones can be combined in most cases.

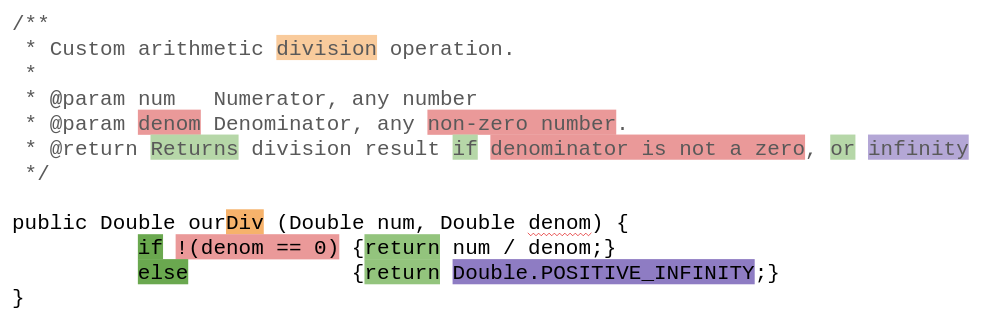

Increasing code readability for humans

One of the practitioner community’s contributions is guidelines and conventions for meaningfully naming, structuring, formatting, and documenting source code. Following these guidelines yields a more uniform code base, which is critical when many different people contribute over disjoint periods of time. The good news is that programmers do tend to follow the guidelines, especially the parts about content, i.e., documenting their knowledge. The not-so-good news is that linters, the tools that check documentation against such guidelines, focus mostly on the formatting of that documentation instead [6].
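To illustrate the gap, here is a minimal Python sketch of a formatting-only docstring check, loosely modeled on the PEP 257 layout rules (a one-line summary ending with a period, followed by a blank line if more text follows). The function `formatting_only_check` and both sample docstrings are hypothetical and do not reproduce any real linter; the point is that a check of this kind accepts an uninformative docstring just as readily as a useful one.

```python
def formatting_only_check(docstring: str) -> bool:
    """Return True if the docstring *looks* right: a one-line summary ending
    with a period, followed by a blank line when there is more text.
    Hypothetical check; it never looks at what the text actually says."""
    lines = docstring.strip().splitlines()
    if not lines or not lines[0].rstrip().endswith("."):
        return False
    return len(lines) == 1 or lines[1].strip() == ""


# Conforms to the layout rules, but tells the reader nothing.
VAGUE = "Does the thing."

# Same layout, but records the formula and a known limitation.
INFORMATIVE = """Convert a temperature from degrees Celsius to degrees Fahrenheit.

Uses F = C * 9/5 + 32; the input is not range-checked.
"""

for name, doc in [("vague", VAGUE), ("informative", INFORMATIVE)]:
    print(name, formatting_only_check(doc))
```

Both docstrings pass, so a tool built only on rules like these cannot tell the reader-friendly one from the empty gesture.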

Adding more code still: testing

Some would argue that code is enough to understand the algorithms, and tests are enough to showcase API usage and both expected and undesirable program executions. There might even be enough tests to account for edge cases, and testing frameworks greatly help automate test case generation and execution. The team might even follow a development approach that puts tests first, like test-driven or behavior-driven development (TDD or BDD, respectively). However, this approach is incomplete in principle: producing a test suite that covers all possible executions of all program units would require far too much effort. Additionally, in the eyes of management, testing at times falls into the same category as documentation: the bare minimum possible. Please refer again to the part above about bugs having real-world consequences.
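As a minimal sketch of tests doubling as usage documentation, the Python snippet below pairs a small function with plain `assert`-based tests covering typical use, edge cases, and rejected inputs. The function `parse_percentage` and its behavior are hypothetical, chosen only for illustration; the test functions follow the pytest naming convention but are also called directly at the bottom, so no test framework is needed to run them.

```python
def parse_percentage(text: str) -> float:
    """Parse a string such as '42%' into a fraction between 0 and 1."""
    if not text.endswith("%"):
        raise ValueError(f"missing '%' suffix: {text!r}")
    value = float(text[:-1])  # raises ValueError for non-numeric input
    if not 0 <= value <= 100:
        raise ValueError(f"percentage out of range: {value}")
    return value / 100


def expect_value_error(text: str) -> None:
    """Fail unless parse_percentage rejects the input with ValueError."""
    try:
        parse_percentage(text)
    except ValueError:
        return
    raise AssertionError(f"expected ValueError for {text!r}")


def test_typical_use():
    # Expected execution: documents the input format and the return scale.
    assert parse_percentage("42%") == 0.42


def test_edge_cases():
    # Boundaries of the accepted range are inclusive.
    assert parse_percentage("0%") == 0.0
    assert parse_percentage("100%") == 1.0


def test_rejected_inputs():
    # Undesirable executions: each of these must fail loudly.
    for bad in ["42", "150%", "-1%", "abc%"]:
        expect_value_error(bad)


for test in (test_typical_use, test_edge_cases, test_rejected_inputs):
    test()
print("all tests passed")
```

Even a suite like this only samples the input space: it says nothing about, say, fractional percentages unless someone thinks to add such a case, which is the incompleteness discussed above.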

Adding formal contracts: between code and text

Some programming languages allow attaching contracts, or assertions of some sort, to specific methods and program points. Contracts are executable specifications that typically state properties of program constructs: is this variable non-null, is this array sorted, is this array index always within the array’s bounds, etc. They complement the type system in statically typed languages (think of C++ and Java) and can provide additional correctness checks in dynamically typed languages (think of Python). Like tests, contracts can be checked while the program runs, but they can also be analyzed by specialized verification frameworks. One big advantage of such static verification is that, unlike testing, it allows for completeness, as the framework analyzes whether the contract holds for all possible executions. However, adoption of contracts is still low due to factors like (a) the additional cost for developers of learning a new formalism, the contract language, (b) the limited, low-level expressiveness of the properties that can be included in a contract: signs and value ranges of numeric variables, array properties, nullness of pointers and variables, and (c) the extreme difficulty of developing program analyses that prove whether those properties hold.
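Python has no built-in contract language, so the following is a minimal sketch of run-time contract checking, assuming a hypothetical `contract` decorator written for this example (it is not the API of any existing contract library). It attaches a precondition (the input is not None and is sorted) and a postcondition (a returned index is within bounds and points at the target, or -1 means the target is absent) to a binary search. Checking contracts at run time, as done here, only covers the executions that actually happen; the completeness discussed above requires a static verification framework.

```python
from collections.abc import Callable, Sequence
from functools import wraps


def contract(pre: Callable[..., bool], post: Callable[..., bool]):
    """Hypothetical decorator: check `pre(*args)` before the call and
    `post(result, *args)` after it, raising AssertionError on violation."""
    def decorate(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            if not pre(*args, **kwargs):
                raise AssertionError(f"precondition of {fn.__name__} violated")
            result = fn(*args, **kwargs)
            if not post(result, *args, **kwargs):
                raise AssertionError(f"postcondition of {fn.__name__} violated")
            return result
        return wrapper
    return decorate


def is_sorted(xs: Sequence[int]) -> bool:
    return all(a <= b for a, b in zip(xs, xs[1:]))


@contract(
    # Precondition: the input exists and is sorted in ascending order.
    pre=lambda xs, target: xs is not None and is_sorted(xs),
    # Postcondition: -1 means "absent"; any other result is a valid index of target.
    post=lambda idx, xs, target: (idx == -1 and target not in xs)
    or (0 <= idx < len(xs) and xs[idx] == target),
)
def binary_search(xs: Sequence[int], target: int) -> int:
    lo, hi = 0, len(xs) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if xs[mid] == target:
            return mid
        if xs[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1


print(binary_search([1, 3, 5, 7], 5))  # 2
print(binary_search([1, 3, 5, 7], 4))  # -1
try:
    binary_search([3, 1, 2], 1)  # unsorted input breaks the precondition
except AssertionError as err:
    print(err)  # precondition of binary_search violated
```

The contracts here are ordinary Python expressions, which sidesteps the cost of learning a separate contract language, but also means nothing proves them ahead of time.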

Generating documentation

Modern integrated development environments (IDEs) can generate documentation stubs, i.e., templates to be filled with text. In theory, another tool, a code linter, can then check whether the comment is still empty and notify the programmer, asking them to improve the documentation. In practice, however, some linters only check whether a comment is present, so even an empty one satisfies the check.
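The gap between “a comment is present” and “a comment says something” is easy to show with Python’s standard `ast` module. In this minimal sketch, `presence_check` mimics a linter rule that only asks whether a docstring exists, while `content_check` additionally ignores blank lines and empty reStructuredText fields such as `:param a:`, of the kind some IDEs generate as stubs. Both checks and the sample functions are hypothetical illustrations, not the rules of any specific linter.

```python
import ast
import re

SOURCE = '''
def add(a, b):
    """

    :param a:
    :param b:
    :return:
    """
    return a + b


def scale(values, factor):
    """Multiply every element of `values` by `factor`.

    :param values: numbers to scale
    :param factor: multiplier applied to each element
    :return: a new list; the input is not modified
    """
    return [v * factor for v in values]


def undocumented(x):
    return x
'''

# A reST field left without a description, e.g. ":param a:" or ":return:".
EMPTY_FIELD = re.compile(r"^:(param \w+|returns?):\s*$")


def presence_check(doc):
    """Passes as soon as any docstring exists, even an empty stub."""
    return doc is not None


def content_check(doc):
    """Passes only if some line carries actual text."""
    if doc is None:
        return False
    meaningful = [
        line for line in doc.splitlines()
        if line.strip() and not EMPTY_FIELD.match(line.strip())
    ]
    return bool(meaningful)


report = {}
for node in ast.parse(SOURCE).body:
    if isinstance(node, ast.FunctionDef):
        doc = ast.get_docstring(node)  # None if the function has no docstring
        report[node.name] = (presence_check(doc), content_check(doc))

for name, (present, informative) in report.items():
    print(f"{name}: present={present}, informative={informative}")
```

The stubbed `add` passes the presence check but fails the content check, `scale` passes both, and `undocumented` fails both.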

As for generating documentation automatically from source code, a problem known in the software engineering research community as “source code summarization”, research to date has mainly focused on one issue: the code itself does not contain enough information (code is not enough!) to produce natural language text that is meaningful to humans. Dozens of publications over the past two decades address the problem of obtaining the relevant context information. Successful prototype documentation generators rely on the outputs of static analyses, on the natural language information in program identifiers, on high-level project documentation traced with information retrieval methods, and on human-written templates that serve as a base to be filled with that information.
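To make the template idea concrete, here is a deliberately naive Python sketch that splits identifiers written in camelCase or snake_case into words and fills a fixed English template with them. The template, the function names `split_identifier` and `summarize`, and the assumption that a method name starts with a verb are all hypothetical simplifications; published generators combine identifier information of this kind with static analysis and richer context, as described above.

```python
import re


def split_identifier(name: str) -> list[str]:
    """Split snake_case and camelCase identifiers into lowercase words.

    Runs of capitals are kept together, so 'parseHTTPResponse' becomes
    ['parse', 'http', 'response'].
    """
    words: list[str] = []
    for part in name.split("_"):
        words += re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", part)
    return [w.lower() for w in words]


def summarize(method_name: str, param_names: list[str], return_type: str) -> str:
    """Fill a one-sentence template, assuming the name starts with a verb."""
    words = split_identifier(method_name)
    if not words:
        return ""
    verb = words[0]
    obj = " ".join(words[1:]) or "result"
    summary = f"{verb.capitalize()}s the {obj}"
    params = ", ".join(" ".join(split_identifier(p)) for p in param_names)
    if params:
        summary += f" given {params}"
    return f"{summary}. Returns {return_type}."


print(summarize("getUserName", ["userId"], "str"))
print(summarize("count_active_users", ["sessionList"], "int"))
```

The second summary already shows the limits of the approach: nothing in the identifiers says which users count as active, which is exactly the missing context the research tries to recover.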

Developing both code and documentation simultaneously

Another approach is to evolve the code and the documentation simultaneously, intertwining them and treating both as equally important software components (which seems natural, given that high-level programming languages designed for human readability dominate the software development market). A good example is the gradual adoption of “living documentation” in agile software development, mostly in teams applying a behavior-driven development workflow. Here, with some additional tool support, programmers can create high-level documentation, such as requirement documents with domain concepts, and link it directly to the executable source code. More commonly, though, the domain model and development-specific information reside in an internal wiki-like tool, such as Confluence, GitLab, Phabricator, or Notion, and links to the source code are added manually.

Literate programming, a paradigm introduced in 1984 by Donald Knuth, another world-famous computer scientist (this time American), falls into the same category. Its main idea is to follow the natural flow and expression of human thought and support it with program execution, not the other way around, where the constraints and order of program execution guide the thought. A well-known example in this spirit is Jupyter notebooks: interactive documents that interleave natural language text with executable source code snippets.
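In Python, a lightweight way to keep prose and code evolving together is the standard library’s `doctest` module: usage examples written into a docstring are executed and compared with the documented output, so documentation that falls out of date fails like a broken test. This is a much smaller-scale technique than the living-documentation or literate-programming tools described above, and the `median` function is only a hypothetical example.

```python
import doctest


def median(values):
    """Return the median of a non-empty list of numbers.

    >>> median([3, 1, 2])
    2

    For an even number of elements, the two middle values are averaged:

    >>> median([4, 1, 3, 2])
    2.5

    An empty list is rejected rather than silently returning a default:

    >>> median([])
    Traceback (most recent call last):
        ...
    ValueError: median() of an empty list
    """
    if not values:
        raise ValueError("median() of an empty list")
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


if __name__ == "__main__":
    # Runs every example found in this module's docstrings.
    print(doctest.testmod())
```

Running the file as a script executes all three documented examples; if someone later changes the error message or the even-length behavior without updating the docstring, `doctest` reports the mismatch.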

And now, the cherry on top:

Documentation is code enough (Good news everyone!)

And we might have been solving the wrong problem all this time.

Even the simplest kind of documentation, source code comments, can guide automated code generation rather successfully if written in enough detail. A prominent example is GitHub Copilot [7], a code auto-completion tool that relies on a neural network trained on documented source code. While it is primarily intended to help programmers write source code, the tool can also generate snippets from a comment written by the programmer.

Processing natural language information to improve source code turns out to work much better overall than trying to extract everything from the source code alone [8]. Slowly but surely, documentation is becoming an indispensable information source for program analysis [9] and software testing automation [10] as well. The core idea is quite simple: the natural language used in technical texts is rather restricted, both in its vocabulary and in its grammatical structures. So as long as specific patterns like “X is not null” can be reliably extracted, they can be turned into executable pieces of test code or verification contracts.
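A toy version of this idea fits in a few dozen lines of Python. The sketch below assumes a small, hypothetical set of sentence patterns (such as “X must not be null” or “X must be positive”), maps each match to a Python boolean expression, and compiles the expressions into a run-time precondition check, keeping only conditions whose subject is an actual parameter name. Research tools such as the one in [10] handle far more varied phrasing; nothing here reproduces their implementation.

```python
import re

# Hypothetical sentence patterns mapped to Python precondition templates.
PATTERNS = [
    (re.compile(r"\b(\w+) (?:is|must) not (?:be )?(?:null|none)\b", re.IGNORECASE),
     "{0} is not None"),
    (re.compile(r"\b(\w+) (?:is|must be) (?:positive|greater than zero)\b", re.IGNORECASE),
     "{0} > 0"),
    (re.compile(r"\b(\w+) (?:is|must be) (?:non-empty|not empty)\b", re.IGNORECASE),
     "len({0}) > 0"),
]


def extract_preconditions(comment: str, params: set[str]) -> list[str]:
    """Turn recognized sentences about parameters into Python expressions."""
    found = []
    for pattern, template in PATTERNS:
        for match in pattern.finditer(comment):
            name = match.group(1)
            if name in params:  # ignore sentences about non-parameters
                found.append(template.format(name))
    return found


def make_checker(preconditions: list[str]):
    """Compile the expressions into a function returning the violated ones.

    Only expressions built from the fixed templates above and known
    parameter names are evaluated, which keeps eval() contained here.
    """
    compiled = [(cond, compile(cond, "<precondition>", "eval")) for cond in preconditions]

    def check(**arguments) -> list[str]:
        violated = []
        for cond, code in compiled:
            try:
                holds = eval(code, {}, arguments)
            except Exception:  # e.g. a missing argument or len(None)
                holds = False
            if not holds:
                violated.append(cond)
        return violated

    return check


COMMENT = """Send a message to the given recipient.
recipient must not be null; retries must be positive.
The body is not null, but may be empty."""

conditions = extract_preconditions(COMMENT, {"recipient", "retries", "body"})
print(conditions)
check = make_checker(conditions)
print(check(recipient="alice", retries=0, body=""))
print(check(recipient=None, retries=3, body="hi"))
```

Note that “may be empty” produces no condition at all, which is the desired outcome: the restricted vocabulary of technical prose is what makes this kind of pattern matching workable in the first place.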

References and links

Please note that preprints of all the papers mentioned below are typically available for free personal use on the web pages of the authors.

[1] P. W. McBurney, C. McMillan (2016): “An empirical study of the textual similarity between source code and source code summaries”. Empirical Software Engineering 21, 17–42. https://doi.org/10.1007/s10664-014-9344-6

[2] X. Xia, L. Bao, D. Lo, Z. Xing, A. E. Hassan and S. Li (2018): “Measuring Program Comprehension: A Large-Scale Field Study with Professionals”. IEEE Transactions on Software Engineering 44(10), 951–976. https://doi.org/10.1109/TSE.2017.2734091

[3] https://stackoverflow.com/

[4] A. Pawlik, J. Segal, H. Sharp and M. Petre (2015): “Crowdsourcing Scientific Software Documentation: A Case Study of the NumPy Documentation Project”. Computing in Science & Engineering 17(1), 28–36. https://doi.org/10.1109/MCSE.2014.93

[5] B. Vasilescu, D. Posnett, B. Ray, M. G. J. van den Brand, A. Serebrenik, P. Devanbu, and V. Filkov (2015): “Gender and Tenure Diversity in GitHub Teams”. In 33rd Annual ACM Conference on Human Factors in Computing Systems. https://doi.org/10.1145/2702123.2702549

[6] P. Rani, S. Abukar, N. Stulova, A. Bergel and O. Nierstrasz (2021): “Do Comments follow Commenting Conventions? A Case Study in Java and Python”. In 21st IEEE International Working Conference on Source Code Analysis and Manipulation, pp. 165–169. https://doi.org/10.1109/SCAM52516.2021.00028

[7] https://copilot.github.com/

[8] M. D. Ernst (2017): “Natural language is a programming language: Applying natural language processing to software development”. In 2nd Summit on Advances in Programming Languages. https://doi.org/10.4230/LIPIcs.SNAPL.2017.4

[9] Y. Zhou, R. Gu, T. Chen, Z. Huang, S. Panichella and H. Gall (2017): “Analyzing APIs Documentation and Code to Detect Directive Defects”. In 39th International Conference on Software Engineering. https://doi.org/10.1109/ICSE.2017.11

[10] A. Blasi, A. Goffi, K. Kuznetsov, A. Gorla, M. D. Ernst, M. Pezzè, and S. Delgado Castellanos (2018): “Translating Code Comments to Procedure Specifications”. In 27th ACM SIGSOFT International Symposium on Software Testing and Analysis. https://doi.org/10.1145/3213846.3213872

Dr. Nataliia Stulova is a software engineer and computer science researcher with 10+ years of experience in software analysis and development. Born in Dnipro, Ukraine, she continued the family tradition of the previous two generations by working in STEM, but chose IT over rocket engineering. Her scientific work focuses on developing tools that keep software and its specifications aligned at different SDLC stages. She works with software requirement documents, source code comments, and formal program specifications, combining and matching the information in them to ensure the correctness of programs and their documentation. During her time in Switzerland, she collaborated with scientists from EPFL, the University of Bern, and ZHAW.